By Christoph Guger

Figure 1: Intendix running on the laptop and user wearing the active electrodes.

A brain-computer interface (BCI) is a communication channel between the human brain and external devices based on the electroencephalogram (EEG). Such a system allows a person to operate different applications just by thinking. Two of the most important applications are spelling and environmental control for totally paralyzed patients.

The Spelling System

Such a spelling system was developed recently: the Intendix BCI system, which was designed to be operated by caregivers or the patient’s family at home. It consists of active EEG electrodes, which avoid abrasion of the skin, a portable biosignal amplifier, and a laptop or netbook running the software under Windows (see Figure 1). The electrodes are integrated into the cap so that the equipment can be mounted quickly and easily. The system allows the raw EEG to be viewed to inspect data quality, and it also indicates automatically to inexperienced users whether the data quality on a specific channel is good or bad.

Spelling is realized by extracting the P300 evoked potential from the EEG data in real time. For this purpose, the characters of the English alphabet, Arabic numerals and icons are arranged in a matrix on a computer screen (see Figure 2). The characters are then highlighted in random order, and the person's task is to concentrate on the specific character he or she wants to spell. At the beginning, the BCI system is trained on the P300 responses to several characters, with multiple flashes per character, to adapt to the specific person.

When the system is started for the first time, a user training session has to be performed. Usually five to ten training characters are entered, and the user has to copy and spell them. The EEG data are used to calculate the user-specific weight vector, which is stored for later use. The software then switches automatically into spelling mode, and the user can spell as many characters as wanted. The system was tested with 100 subjects who had to spell the word LUCAS. After five minutes of training, 72% were able to spell it correctly without any mistakes.
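The training and spelling steps described above can be sketched in a few lines of Python. This is a minimal illustration, not the Intendix implementation: the article does not say how the weight vector is computed, so the sketch assumes a simple stand-in (mean target epoch minus mean non-target epoch). The function names, the synthetic `simulate_epoch` signal and the 36-character matrix are all hypothetical.

```python
import random

def mean_vector(vectors):
    """Element-wise mean of equally long feature vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]

def train_weight_vector(epochs, is_target):
    """Assumed stand-in for training: mean target epoch minus mean non-target epoch."""
    targets = [e for e, t in zip(epochs, is_target) if t]
    others = [e for e, t in zip(epochs, is_target) if not t]
    return [a - b for a, b in zip(mean_vector(targets), mean_vector(others))]

def score(weights, epoch):
    """Project one epoch onto the user-specific weight vector."""
    return sum(w * x for w, x in zip(weights, epoch))

def select_character(weights, flashes):
    """flashes: list of (character, epoch). Returns the character with the highest summed score."""
    totals = {}
    for ch, epoch in flashes:
        totals[ch] = totals.get(ch, 0.0) + score(weights, epoch)
    return max(totals, key=totals.get)

def simulate_epoch(attended, rng):
    """Synthetic stand-in for an EEG epoch: noise, plus a bump when the flashed character is attended."""
    bump = [0, 0, 1, 2, 2, 1, 0, 0]
    return [rng.gauss(0, 1) + (b if attended else 0) for b in bump]

def flash_rounds(matrix, attended, rounds, rng):
    """Flash every character once per round, in random order."""
    flashes = []
    for _ in range(rounds):
        for ch in rng.sample(matrix, len(matrix)):
            flashes.append((ch, simulate_epoch(ch == attended, rng)))
    return flashes

if __name__ == "__main__":
    rng = random.Random(0)
    matrix = list("ABCDEFGHIJKLMNOPQRSTUVWXYZ0123456789")
    # Training session: the user copies five known characters.
    epochs, labels = [], []
    for target in "TRAIN":
        for ch, epoch in flash_rounds(matrix, target, 15, rng):
            epochs.append(epoch)
            labels.append(ch == target)
    weights = train_weight_vector(epochs, labels)
    # Spelling mode: reuse the stored weight vector for every new character.
    spelled = "".join(
        select_character(weights, flash_rounds(matrix, ch, 15, rng)) for ch in "LUCAS"
    )
    print(spelled)
```

The weight vector is computed once and then reused, which mirrors the stored user-specific vector mentioned above.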

The user can perform different actions, such as copying the spelled text into an editor, copying the text into an e-mail, sending the text via text-to-speech facilities to the loudspeakers, printing the text or sending the text via UDP to another computer. A specific icon exists for each of these services.
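As an illustration of the UDP option, the following sketch sends a spelled string as a single datagram using Python's standard `socket` module. The article does not describe the message format Intendix uses, so the UTF-8 payload, the function name `send_text_udp` and the host and port values are assumptions made for this example only.

```python
import socket

def send_text_udp(text, host, port):
    """Send the spelled text as one UTF-8 datagram; returns the number of bytes sent."""
    payload = text.encode("utf-8")  # assumed encoding, not documented in the article
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        return sock.sendto(payload, (host, port))

if __name__ == "__main__":
    # Hypothetical receiver on the local machine, port 5005.
    send_text_udp("LUCAS", "127.0.0.1", 5005)
```

UDP is connectionless, so the sender gets no confirmation that the other computer received the text.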

The number of flashes for each classification can be selected by the user to balance speed against accuracy. Alternatively, the user can choose a statistical approach that automatically detects whether the user is working with the BCI system. The latter has the advantage that no characters are selected if the user is not looking at the matrix or does not want to use the speller.
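One way to picture such a statistical approach is a stopping rule that selects a character only when its accumulated score clearly stands out from all others. The article does not specify the actual statistic, so the sketch below assumes a simple z-score test. The threshold of 3.0, the limit of 15 rounds and the function names are hypothetical.

```python
from statistics import mean, pstdev

def decide(totals, threshold=3.0):
    """Return the leading character if it stands out from the rest, else None."""
    best = max(totals, key=totals.get)
    rest = [v for ch, v in totals.items() if ch != best]
    if len(rest) < 2:
        return None  # too few competitors to estimate a spread
    spread = pstdev(rest)
    if spread == 0:
        return None  # no usable noise estimate
    z = (totals[best] - mean(rest)) / spread
    return best if z >= threshold else None

def run_selection(score_rounds, threshold=3.0, max_rounds=15):
    """Accumulate per-character scores round by round; stop early once one character is clear.

    score_rounds: list of dicts mapping character -> classifier score for that round.
    Returns (character or None, number of rounds used).
    """
    totals = {}
    used = 0
    for round_scores in score_rounds[:max_rounds]:
        used += 1
        for ch, s in round_scores.items():
            totals[ch] = totals.get(ch, 0.0) + s
        choice = decide(totals, threshold)
        if choice is not None:
            return choice, used
    return None, used  # user apparently not attending: select nothing
```

With a rule of this kind, an attentive user needs fewer flashes per character, while an inattentive user produces no selection at all.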

VR Control

Figure 2: User interface with 50 characters like a computer keyboard.

Recently, BCI systems have also been combined with Virtual Reality (VR) systems. VR systems use either head-mounted displays (HMDs) or highly immersive back-projection systems (CAVE-like systems). Such a CAVE has three back-projectors for the walls and one projector on top of the CAVE for the floor. The system projects two images, which are separated by shutter glasses to achieve 3-D effects.

Several issues must be solved to use a BCI system in such an environment: the biosignal amplifiers must be able to work in such a noisy environment; the recordings should ideally be done without wires to avoid collisions and irritations within the environment; the BCI system must be coupled with the VR system so that information is exchanged fast enough for real-time experiments; and a special BCI communication interface must be developed that offers enough degrees of freedom to control the VR system.

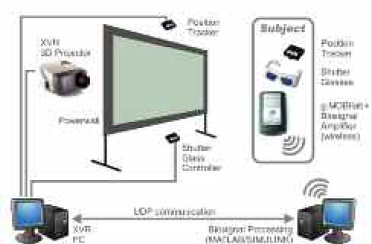

Figure 3 illustrates the components in detail. A 3-D projector is located behind a projection wall for back projection. The subject stands in front of the projection wall to avoid shadows and wears a position tracker to capture movements, shutter glasses for 3-D effects and the biosignal amplifier, including electrodes, for EEG recordings. The XVR PC controls the projector, the position tracker controller and the shutter glass controller. The biosignal amplifier transmits the EEG data wirelessly to the BCI system, which is connected to the XVR PC to exchange control commands.

Figure 3: Components of a Virtual Reality system linked to a BCI system.

Figure 4: Smart home control sequence and VR representation. The VR environment was developed by Chris Groenegress and Mel Slater from University of Barcelona, Spain.

In order to show that such a combination is possible, a virtual version of a smart home was implemented in eXtreme VR (XVR, VRmedia, Italy). The smart home consists of a living room, a kitchen, a bedroom, a bathroom, a floor and a patio, as shown in Figure 4. Each room has several devices that can be controlled: a TV, MP3 player, telephone, lights, doors, etc. All the different commands were grouped into seven control masks: a light mask, a music mask, a phone mask, a temperature mask, a TV mask, a move mask and a go to mask. Figure 4 shows the TV mask and, as an example, the corresponding XVR image of the living room. The subject can switch on the TV by first looking at the TV symbol. Then the station and the volume can be regulated. A special go to mask consists of a plan of the smart home and of letters indicating the different accessible spots in the smart home. The letters flash during the experiment, and the subject has to focus on the spot where he or she wants to go. After the decision of the BCI system, the VR program moves to a bird’s-eye view of the apartment and zooms to the spot that was selected by the user. This is a goal-oriented BCI control approach, in contrast to a navigation task, where each small navigational step is controlled.
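The goal-oriented idea can be pictured as a lookup from one BCI decision to a complete VR action. The sketch below is an illustration only: the seven mask names come from the text, but the letter-to-spot assignment, the function name `go_to_command` and the command strings are hypothetical and do not reflect the actual XVR interface.

```python
# The seven control masks named in the article.
CONTROL_MASKS = ("light", "music", "phone", "temperature", "TV", "move", "go to")

# Hypothetical assignment of flashing letters to accessible spots.
GO_TO_SPOTS = {
    "A": "living room",
    "B": "kitchen",
    "C": "bedroom",
    "D": "bathroom",
    "E": "floor",
    "F": "patio",
}

def go_to_command(letter):
    """Translate one selected letter into the full camera sequence for the VR program."""
    spot = GO_TO_SPOTS.get(letter)
    if spot is None:
        raise ValueError(f"no accessible spot for letter {letter!r}")
    # One decision yields the whole movement; no step-by-step steering is needed.
    return ["bird's-eye view", f"zoom to {spot}"]

if __name__ == "__main__":
    print(go_to_command("B"))
```

A navigation-style interface would instead need one BCI decision per small movement, which is slower when each decision takes several flashes.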

Currently, the BCI technology is being interfaced with real smart home environments within the EC project SM4all. The project aims at studying and developing an innovative middleware platform for the inter-working of smart embedded services in immersive and person-centric environments.

Christoph Guger, Ph.D.

g.tec medical engineering

Austria

guger@gtec.at

www.gtec.at
